Introduction

In

addition to writing PL/SQL code and constructing SQL queries, database

developers are often called on to design table structures. Wouldn’t it

be great if there was a weekly quiz to test and improve your knowledge

of data modelling to help you with this? Well now there can be!

To

complement the existing PL/SQL and SQL quizzes, we (Steven Feuerstein and Chris Saxon, a longtime PL/SQL Challenge player who is offering to administer this quiz) propose the creation of a new weekly "Database Design" quiz as part of the PL/SQL

Challenge. The intended scope of this quiz is:

- Reading and understanding database schema diagrams

- Determining how to apply database constraints (e.g. foreign keys, unique constraints, etc. ) to enforce business rules

- Understanding and application of database theory (normal forms, star schemas, etc.)

- Appropriate indexing strategies and physical design considerations (e.g. partitioning, index organised tables, etc.)

Below

is a starting set of assumptions and three example quizzes. Please have

a read of these and send us your thoughts on whether or not you would

be interested in a database design quiz and the nature of the questions.

Thanks!

Steven and Chris

Thanks!

Steven and Chris

Assumptions

All schema diagrams shown will be drawn using Oracle Data Modeler, using the following settings:

* The Logical Model Notation Type of Barker

* Relational Model Foreign Key Arrow Direction set to “From Foreign Key to Primary Key”

To indicate constraints on columns, the following will appear next to them:

- An asterisk indicates that the column is mandatory (i.e. not null)

- P means this forms part of the primary key for the table

- F means this forms part of a foreign key to another table

- U means this forms part of a unique constraint on the table

For normal forms, the following definitions are used:

First Normal Form (1NF):

- Table must be two-dimensional, with rows and columns.

- Each row contains data that pertains to one thing or one portion of a thing.

- Each column contains data for a single attribute of the thing being described.

- Each cell (intersection of row and column) of the table must be single-valued.

- All entries in a column must be of the same kind.

- Each column must have a unique name.

- No two rows may be identical.

- The order of the columns and of the rows does not matter.

- There is (at least) one column or set of columns that uniquely identify a row

- Date’s definition that all columns must be mandatory for a table to be in 1NF will not be included for the purposes of this quiz.

Second Normal Form (2NF):

- Table must be in first normal form (1NF).

- All nonkey attributes (columns) must be dependent on the entire key.

Third Normal Form (3NF):

- Table must be in second normal form (2NF).

- Table has no transitive dependencies.

Boyce-Codd Normal Form (BCNF)

- Table must be in third normal form

- Table has no overlapping keys (that is two or more candidate keys that have one or more columns in common)

*

The only database objects that exist are those shown in the quiz or are

available in a default installation of Oracle Enterprise Edition

*

There is no relationship between different answers within a quiz. The

correctness of one choice has no impact on the correctness of any other

choice.

* All code (PL/SQL blocks, DDL statements, SQL statements, etc.) is run in Oracle's SQL*Plus.

* The

edition of the database instance is Enterprise Edition; the database

character set is ASCII-based with single-byte encoding; and a dedicated

server connection is used. The version of the Oracle client software

stack matches that of the database instance.

* All

code in the question and in the multiple choice options run in the same

session (and concurrent sessions do not play a role in the quiz unless

specified). The schema in which the code runs has sufficient system and

object privileges to perform the specified activities.

* When

analyzing or testing choices, you should assume that for each choice,

you are starting "fresh" - no code has been run previously except that

required to install the Oracle Database and then any code in the

question text itself.

* The

session and the environment in which the quiz code executes has enabled

output from DBMS_OUTPUT with an unlimited buffer size, using the

equivalent of the SQL*Plus SET SERVEROUTPUT ON SIZE UNLIMITED FORMAT

WRAPPED command to do the enabling. The SET DEFINE OFF command has been

executed so that embedded "&" characters will not result in prompts

for input. Unless otherwise specified, assume that the schema in which

the code executes is not SYS nor SYSTEM, nor does it have SYSDBA

privileges.

*

Index performance will be measured in terms of the “constistent gets”

metric, as reported when enabling autotrace. This will be done using the

command: “set autotrace trace” in SQL*Plus.

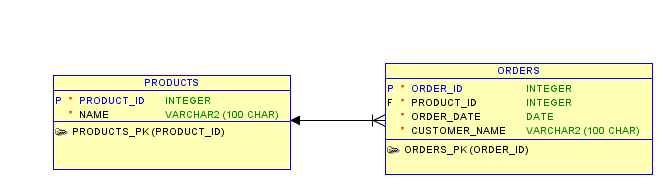

Q1: Topic: Understanding entity relationship diagrams

Difficulty: Beginner

Below is a schema diagram showing a table structure for storing order and product details in a database:

Given this structure, which of the following statements are true:

Questions

Q1. All orders must be linked to a product.

Q2. An order may be linked to more than one product.

Q3. A product may be associated with multiple orders.

Q4. It is not possible to have products with no linked orders.

Answers

A1.

Correct. The ORDERS.PRODUCT_ID column is a mandatory foreign key to the

PRODUCTS table, as denoted by the F (foreign key) and asterisk

(mandatory) next to this column.

A2. Wrong. Only one value can be stored in the ORDERS.PRODUCT_ID column for a given ORDERS row.

A3.

Correct. There is no unique constraint on the ORDERS.PRODUCT_ID column,

therefore the same product can be listed on more than one row in the

ORDERS table.

A4.

Wrong. As the ORDERS.PRODUCT_ID column is mandatory, an entry must

exist in the PRODUCTS table before an order can be linked to it.

Therefore it must be possible to have a product with no associated

orders.

Q3 Topic: 3rd normal form

Difficulty: Intermediate

A

new social networking site is under development and work is taking

place on the user profile page. The current requirements are a profile

page should be able to display:

* A user’s email address

* Their name

* Their date of birth

* Their astrological star sign (determined from the day and month of their birth)

You’ve been asked to design the table to store this information and have come up with the following:

create table plch_user_profile (

user_id integer primary key,

email varchar2(320) not null unique,

display_name varchar2(200),

date_of_birth date not null,

star_sign varchar2(10)

);

Unfortunately,

the data architect isn’t happy with this, saying the table isn’t in

third normal form! How can this be changed so it complies with third

normal form while still meeting the requirements above?

Questions

Q1. Remove the unique constraint on the EMAIL column.

Q2. Remove the STAR_SIGN column from the table.

Q3. Change the DATE_OF_BIRTH column to accept null values.

Q4. Remove the DATE_OF_BIRTH column from the table.

Answers

A1.

Wrong. Removing this constraint means we still have a dependency

between DATE_OF_BIRTH and STAR_SIGN, which can lead to "update

anomalies" if only one of these columns is changed. To meet third normal

form, we must remove one of these columns.

A2.

Correct. We can calculate a person's star sign from the day and month

of their birth, so we can still display a person's star sign on their

profile if we just store their date of birth.

A3.

Wrong. There’s still a (non-prime) dependency between DATE_OF_BIRTH and

STAR_SIGN. This means it is possible to enter a (date_of_birth,

star_sign) pair that isn't valid in the real world (e.g. 1st Jan 1980,

Libra)

A4.

Wrong. Removing DATE_OF_BIRTH from the table does remove the non-prime

dependency DATE_OF_BIRTH->STAR_SIGN so the table is now in third

normal form. However, we can't determine the DATE_OF_BIRTH just from a

peron's star sign, meaning we no longer meet the business requirement to

display this!

Q3 Topic: Indexing strategies

Difficulty: Intermediate

We have the following table that stores details of customer orders:

create table plch_orders (

order_id integer primary key,

customer_id integer not null,

order_date date not null,

order_status varchar2(20) check (

order_status in ('UNPAID', 'NOT SHIPPED', 'COMPLETE', 'RETURNED', 'REFUNDED')),

notes varchar2(50)

);

This

stores over a million orders that have been placed over the past ten

years. The vast majority (>95%) of the orders have the status of

COMPLETE. There are a few thousand different customers that place

approximately one order per month.

Recently

there’s been complaints from customers that their orders are taking a

long time to arrive, so your boss wants a daily report displaying the

PLCH_ORDERS.CUSTOMER_ID for orders that have been placed within the past

month that have not yet been shipped. You put together the following

query:

select customer_id

from plch_orders

where order_date > add_months(sysdate, -1)

and order_status = 'NOT SHIPPED';

Unfortunately,

there's currently no indexes on the columns used in this query so it's

taking a long time to run! Which of the following indexes can we create

that can benefit the performane of this query:

Questions

Q1. create index plch_unshipped on plch_orders (order_status);

Q2. create index plch_unshipped on plch_orders (order_status, order_date);

Q3. create index plch_unshipped on plch_orders (order_status, order_date, customer_id);

Q4. create index plch_unshipped on plch_orders (customer_id);

Q5. create index plch_unshipped on plch_orders ( customer_id, order_date, order_status);

Q6. create index plch_unshipped on plch_orders ( customer_id, order_status);

Answers

A1. Correct. Only a small percentage of orders will have the status "NOT SHIPPED" so an index on this will be beneficial.

A2.

Correct. Adding the date to the index enables Oracle to filter the data

even further, requiring fewer rows to be inspected in the table.

A3. Correct. This is a "fully covering" index, meaning the query can be answered without having to access the table at all.

A4.

Wrong. CUSTOMER_ID doesn’t appear in the where clause of the query,

therefore it is not available to Oracle to improve the execution of this

query.

A5.

Correct. This also a "fully covering" index, so the Oracle can answer

the query by just inspecting the index without accessing the table.

However, this will result in an “INDEX FAST FULL SCAN” rather than an

“INDEX RANGE SCAN” as in answer three which is less efficient. This is

because CUSTOMER_ID is the first column in the index, so Oracle is not

able to access the NOT SHIPPED rows directly. Instead it must scan

through the whole index

A6.

Wrong. For an index to be considered by the optimizer then the first

column(s) in the index must be in the where clause. If the leading

columns have a low distinct cardinality, then Oracle can choose to

perform an "index skip scan" operation. However, we have a large number

of different customers in this case, so a skip scan is not possible.

Verification Code

create table plch_orders (

order_id integer primary key,

customer_id integer not null,

order_date date not null,

order_status varchar2(20) check (

order_status in ('UNPAID', 'NOT SHIPPED', 'COMPLETE', 'RETURNED', 'REFUNDED')),

notes varchar2(50));

insert into plch_orders

select rownum, mod(rownum, 3179),

sysdate - ((1000000-rownum)/1000000)*3650,

case when rownum <= 950000 then 'COMPLETE'

else decode(mod(rownum, 5),

0, 'COMPLETE',

1, 'UNPAID',

2, 'NOT SHIPPED',

3, 'RETURNED',

4, 'REFUNDED')

end,

dbms_random.string('x', 50)

from dual

connect by level <= 1000000;

commit;

exec dbms_stats.gather_table_stats(user, 'plch_orders', cascade => true);

set autotrace trace

PRO default query results in full table scan

select customer_id

from plch_orders

where order_date > add_months(sysdate, -1)

and order_status = 'NOT SHIPPED';

create index plch_unshipped on plch_orders (order_status);

exec dbms_stats.gather_table_stats(user, 'plch_orders', cascade => true);

PRO Index range scan with table access by rowid

select customer_id

from plch_orders

where order_date > add_months(sysdate, -1)

and order_status = 'NOT SHIPPED';

drop index plch_unshipped;

create index plch_unshipped on plch_orders (order_status, order_date);

exec dbms_stats.gather_table_stats(user, 'plch_orders', cascade => true);

PRO Index range scan with table accesss by rowid.

PRO Because order_date is included, this is index is more selective so results in fewer consistent gets

select customer_id

from plch_orders

where order_date > add_months(sysdate, -1)

and order_status = 'NOT SHIPPED';

drop index plch_unshipped;

create index plch_unshipped on plch_orders (order_status, order_date, customer_id);

exec dbms_stats.gather_table_stats(user, 'plch_orders', cascade => true);

PRO Index range scan with no table access. The most efficient option

select customer_id

from plch_orders

where order_date > add_months(sysdate, -1)

and order_status = 'NOT SHIPPED';

drop index plch_unshipped;

create index plch_unshipped on plch_orders (customer_id);

exec dbms_stats.gather_table_stats(user, 'plch_orders', cascade => true);

PRO Causes a full table scan as in the un-indexed example

select customer_id

from plch_orders

where order_date > add_months(sysdate, -1)

and order_status = 'NOT SHIPPED';

drop index plch_unshipped;

create index plch_unshipped on plch_orders (customer_id, order_date, order_status);

exec dbms_stats.gather_table_stats(user, 'plch_orders', cascade => true);

PRO Results in an index fast full scan. This is less efficient than the other correct examples,

PRO but is still more efficient than a FTS, resulting in ~1/3 fewer consistent gets in my example

select customer_id

from plch_orders

where order_date > add_months(sysdate, -1)

and order_status = 'NOT SHIPPED';

drop index plch_unshipped;

create index plch_unshipped on plch_orders (customer_id, order_status);

exec dbms_stats.gather_table_stats(user, 'plch_orders', cascade => true);

PRO Results in a full table scan as in the unindexed example

select customer_id

from plch_orders

where order_date > add_months(sysdate, -1)

and order_status = 'NOT SHIPPED';

Looks good to me ;-)

ReplyDeleteOne thing: I am not sure I know what "transitive dependency" is? (Probably because I haven't really got any experience with formal definitions of the different types of normal forms - I just model what I think is common sense ;-) I guess it is something like the dependency between DATE_OF_BIRTH and STAR_SIGN.

I do not propose to add explanation of "transitive dependency" to the assumptions - then other people might like some of the other definitions more detailed explained, and suddenly the assumptions are a mile long.

But perhaps assumptions or instructions page could contain a link to a "data modeling dictionary" or something to help new players to establish a little working knowledge of the terms and definitions used in data modeling?

I like this idea as it gives developers practice with data modeling tools and design theory. I vote yes!

ReplyDeleteI will definitely take this quiz!

ReplyDeleteIt would be nice to have such quiz. Only that quizzes about ER, constraints and theory will not relate to Oracle, but quizzes about indexing strategy and physical design will be very Oracle dependent.

ReplyDeleteI agree 100% with what Kim said above ( right, a trivial kind of comment from my side ! ).

ReplyDeleteUnderstanding data modeling is important, but, however, I think that we should be very careful

*NOT* to arrive at the point when a quiz will ask something like:

"Which button should be pressed (in a specific graphic Data Modeler tool) for accomplishing this or that ..."

If the quizzes are going to be about Data Modeling, aka focusing on the *final result*, which is

a correctly defined and optimally performing table, index, schema, application, then it is ok and most welcome.

On the other hand, if answering a quiz would require close knowledge of some specific data modeling tool

(even of a single specific one out of -- I guess -- many of these ), then, for me, it would be less interesting.

Also, following Kim's idea, I am not sure that knowing exactly how a design book defines "transitive dependency"

will make you a better designer ...

I think that most developers (maybe excepting the truly beginners) are definitely able to design even entire applications

by applying a "common sense" regarding the tables structures and their relationships, without knowing anything at all about

the different Normal Forms ... this is how probably most of us have become Oracle developers.

I remember a class organized many years ago in our company exactly about Data Design,

where the instructor spoke hours after hours exactly about this topic, and all the audience (composed of more or less

experienced developers) was almost falling asleep in the room ...

And, then, everybody discovered that, since the very first day that he was designing tables,

these were always and automatically in the 3-rd normal form, without having known anything at all about such a concept :):)

In other words, facing a quiz like the 1st or the 2nd examples above, once you answer based on common sense only,

you will probably answer 100% correctly.

If you start to think about "book definitions", then you have more chances to be driven into the wrong direction.

The 3-rd sample quiz (as you can probably easily guess :) ), is the kind that looks to me the most useful,

and this is the field where yes, indeed, there is a lot of room for mastering performance theory as well,

THE huge "daily butter and bread" issue that never ends ...

Yes, indeed, when it comes to really understanding things also at a theoretical level,

I think that the "Design for Performance" is indeed THE topic which deserves the highest focus.

To summarize, as long as we will be able to avoid "slipping into Academism" or into "tools usage technicalities",

such a competition can indeed be very instructive.

All in all, a nice idea, chapeau to Chris for taking on himself this not small challenge :):)

And, for the exhausted old ones like me, maybe the good opportunity to finally also enjoy the

non-competitive playing variant.

Thanks a lot & Best Regards,

Iudith

( I am not Anonymous ... but for some reason I am not allowed to use my real profile :( :( )

I like the idea. I'll be looking forward to seeing it come alive:-)

ReplyDeleteThanks everyone for the positive comments!

ReplyDeleteTo address some of the concerns:

Kim: I take your point about having an available data modelling dictionary to make the terms clear. I'll have a look to see if I can find anything suitable - if anyone has suggestions please let me know. PS - your understanding is basically correct; it is a relationship between two (or more) columns that aren't part of the primary/unique(candidate) keys for a table.

Iudith: I agree that the quiz should stay on actual modelling techniques and not be about the tool. I merely chose Oracle Data Modeler because it is a freely available tool which I'm familiar with.

Personally I feel not enough people really understand what normalization is and why you should (or shouldn't) do this - many talk about (de)normalization when they really mean something else. This is part of why I want to include this topic in the quiz. I would aim to keep these in "real-world" contexts as above to avoid this becoming too theoretical though.

If anyone has questions or comments they would like to make to me privately then please message me directly (using the challenge messaging or chris dot saxon at gmail dot com). If anything comes up that is applicable to a wider audience then I'll summarise points as comments on here.

Thanks, Chris

Could be interesting subject to do on a regular basis. Like most of people, I should have sleep during that course... but I use it anyway as this is common sense...

ReplyDeleteSo it will help to put some better definition on it!

So I vote YES too!

I really like the idea. I'll ask ACED Kent Graziano if he would like to help with quiz creation/review.

ReplyDeleteCan't wait this quiz to start!

ReplyDeleteI vote for this quiz. However, we will be likely seeing some corner cases. For example the third case says "small percentage". I tried a case where 4 percent of the orders were NOT SHIPPED and the index was only on ORDER_STATUS column. Oracle still made the index range scan, but the logical IOs per query were:

ReplyDelete11735 for full table scan

1035 for index range scan and table access

Full table scan is or course multiblock reads, and index range scan is single block reads. This can make a difference, and also you have to consider that blocks can be buffered (and the query time reduces by a big margin), then the CPU time is always there for index scan + block fetching overhead.

Q3 with index on (order_status, order_date, customer_id) made only 54 logical IOs.

So, in conclusion, I am not a big fan of index scan + table access with big data.

Looking forward ... GREAT !!!

ReplyDeleteYes, I'd like to play such a quiz.

ReplyDeleteDalibor

I model based on my common sense. I would think and guess that it is reasonably "normal form", though I haven't much clue whether I'm doing 1st, 2nd or 3rd ;-)

ReplyDeleteI would take this quiz as a method to start learning something about what true modellers talk about when they say "you should use 3rd normal form." Why is that good? What cases might there be for not stringently insisting on "always 3rd normal form" but sometimes let common sense say "nah, in this case there is a valid reason for not doing it by the book."

So a quiz that shows us "practical" people when theory actually might help us - without the quiz becoming a university textbook class - that will be great ;-)

Guys I love this! There is definitely a need to encourage developers (and some dbas) to learn more about normalization, what it is, and why a properly built data model can make your application more sustainable over the long term.

ReplyDeleteI think the quiz is a great way to do this. Not sure I have time to build quiz questions (as someone suggested) but I should be able to review and provide some feedback.

I have seen so much bad design in recent years it is hard to believe some of the systems ever went live. Hopefully this can change the trend.

I love it! There is a huge need for this type of education. I have seen way too much bad design in recent years in the name of "agile" development. A good data model will actually make it easier to be agile and result in better data in the long run.

ReplyDeleteThanks, Kent. Yes, I think this quiz has lots of potential....and once we get it going and you see the kinds of quizzes we are publishing, perhaps you will find it possible to do one or two. I will follow up with you (or Chris will) later...

ReplyDeleteHello All,

ReplyDelete@SiimKask, just a short feedback to your post:

Regarding optimization problems, you are right, there are not only "corner cases",

but even "classical" cases may become "corner cases" under some scenarios.

The cost-based optimizer is becoming more and more clever along the versions,

but the many features added can sometimes conflict and produce unexpected results.

However, if what is called "a small percentage" used to be entirely enough for an index range scan to be

always chosen by the rule optimizer, in the case of the cost-based optimizer things become different.

If a table is really very big (much bigger than the 1M rows in the sample quiz),

then the optimizer may definitely happen to choose a full table scan even for a very small percentage of rows selected, to avoid performing an excessive number of single block IO-s.

It all depends on the cost calculation results that the optimizer performs for that specific case (data),

and you cannot exactly "put the finger" and indicate at which percentage the "switch" between index and full table scan will happen, this may be very different from one table to another.

Thanks a lot & Best Regards,

Iudith

Siim Kask - I also take your point regarding corner cases. I think we'd need to be more rigorous in the quiz wording to ensure there's a definitive answer. I'd aim that the quizzes would be suitably clear-cut that the correct answers would be apparent even with some interpretation of the question.

ReplyDeleteI agree with your conclusion re the difference a fully covering index makes. This does have to be traded off against the increased index maintenance for insert/update/deletion operations however!

Kent - would love to get your input on this quiz; I've sent you a tweet to connect, if you could get in touch so we can discuss this that would be brilliant, thanks.

Sounds good to me.

ReplyDeleteThis is something I was waiting for since a long time! Thanks Steven and Chris.

ReplyDelete